Twenty years ago, if we had asked you about AI, you would probably have thought we were talking about the movie A.I. Artificial Intelligence. Yeah, the one with Haley Joel Osment. Back then, the concept of computers resembling humans was so outlandish that it only fit in sci-fi films.

Fast forward to the present day, and check out this poem. It’s a poem about Elon Musk in the style of Dr. Seuss. And it was completely written by AI. 🤯

The world of AI is much discussed but often misunderstood. The applications seem endless, but the tech itself frequently seems too theoretical to actually be put to use. But in June 2020, OpenAI released beta access to its natural language model, GPT-3. The release was big news in the AI world, and we think it changes the way people can solve real-world problems with AI.

So let’s take some time and talk about what GPT-3 is, what it can do, and how it can be utilized in the world of support to help people and companies.

What is GPT-3?

GPT-3 is short for Generative Pre-trained Transformer 3, a natural language model built by OpenAI that uses machine learning to create human-like text.

For those of us who don’t speak computer-science jargon, that means GPT-3 is an artificial intelligence system that allows your program to understand text written by a human, and respond with text as though it had been written by a human.

For example, you could prompt GPT-3 with a question such as “Describe who won the FIFA World Cup in 2006?”, and GPT-3 would respond “The 2006 FIFA World Cup was won by Italy”. But that is a severe simplification of GPT-3’s capabilities (we’ll get to that in a second).

GPT-3 is able to produce this human-like text with an artificial neural network consisting of 175 billion model parameters. That massive capacity enables GPT-3 to become really good at recognizing, understanding and producing content that is remarkably human.

What can GPT-3 do?

So, how can GPT-3 actually be used?

Part of the power of GPT-3 is in its flexibility. OpenAI designed it to be applicable to a variety of uses: content creation, translation, semantic search, and more. Developers like Arram Sabeti have tested GPT-3's ability to write songs or poems. The Guardian had GPT-3 write an essay. It can even be used to design websites, generate resumes, or create memes.

The applications are seemingly endless, but there’s a catch.

GPT-3 doesn’t work like magic out of the box. It needs some extra inputs or “programming” to make it work as intended and inform the model on what to produce.

Let’s look at our oversimplified example again. If you want GPT-3 to return an answer to “Who won the FIFA World Cup in 2006?”, you may need to “train” it first by showing it your preferred Q&A format:

Q: Who won the Super Bowl in 2010?

A: The 2010 Super Bowl was won by the New Orleans Saints.

Q: Describe the Major League Baseball team in San Francisco?

A: The San Francisco Giants are a Major League Baseball team based in San Francisco, California.

Then when you prompt GPT-3 with:

Q: Describe who won the FIFA World Cup in 2006?

It knows to answer:

A: The 2006 FIFA World Cup was won by Italy.
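This pattern of showing a few worked examples before the real question is often called few-shot prompting. Here is a minimal sketch of how such a prompt could be assembled as plain text. The helper name `build_few_shot_prompt` is our own invention for illustration, and the sketch only builds the prompt string; sending it to GPT-3 is not shown.

```python
from typing import List, Tuple


def build_few_shot_prompt(examples: List[Tuple[str, str]], question: str) -> str:
    """Format worked Q&A pairs, then the new question with an open 'A:'.

    Hypothetical helper for illustration; it only builds text and does
    not call any model or API.
    """
    # Each worked example becomes a "Q: ... / A: ..." block.
    blocks = [f"Q: {q}\n\nA: {a}" for q, a in examples]
    # The real question ends with a bare "A:" so the model completes it.
    blocks.append(f"Q: {question}\n\nA:")
    return "\n\n".join(blocks)


examples = [
    ("Who won the Super Bowl in 2010?",
     "The 2010 Super Bowl was won by the New Orleans Saints."),
    ("Describe the Major League Baseball team in San Francisco?",
     "The San Francisco Giants are a Major League Baseball team "
     "based in San Francisco, California."),
]

prompt = build_few_shot_prompt(
    examples, "Describe who won the FIFA World Cup in 2006?"
)
print(prompt)
```

Ending the prompt on an open “A:” is the design choice that matters here: the examples establish the format, and the model's most natural continuation is an answer in the same style.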

GPT-3 isn’t perfect. It will still make silly mistakes and won’t sound 100% human, as admitted by OpenAI co-founder Sam Altman.

This has led some to conclude that GPT-3 isn’t useful in the world of customer support. We disagree.

How can GPT-3 be used for Customer and Product Support?

Let’s start with the idea that GPT-3, and AI in general, can be used to impersonate and replace human support teams. That’s the wrong approach.

GPT-3 isn’t flawless, and people will pick up on that. In fact, that’s an inherent problem with chatbots in general. This problem is worsened if you pair GPT-3 with other platforms that are not designed to resolve real issues. That combo just results in a bunch of angry customers who have “chatted” with a bot that didn’t actually fix anything.

Instead, the right way to approach GPT-3 is to ask how it can augment humans. This ideal, called the Centaur model, is the idea that humans + computers will yield a better result than either humans or computers could alone.

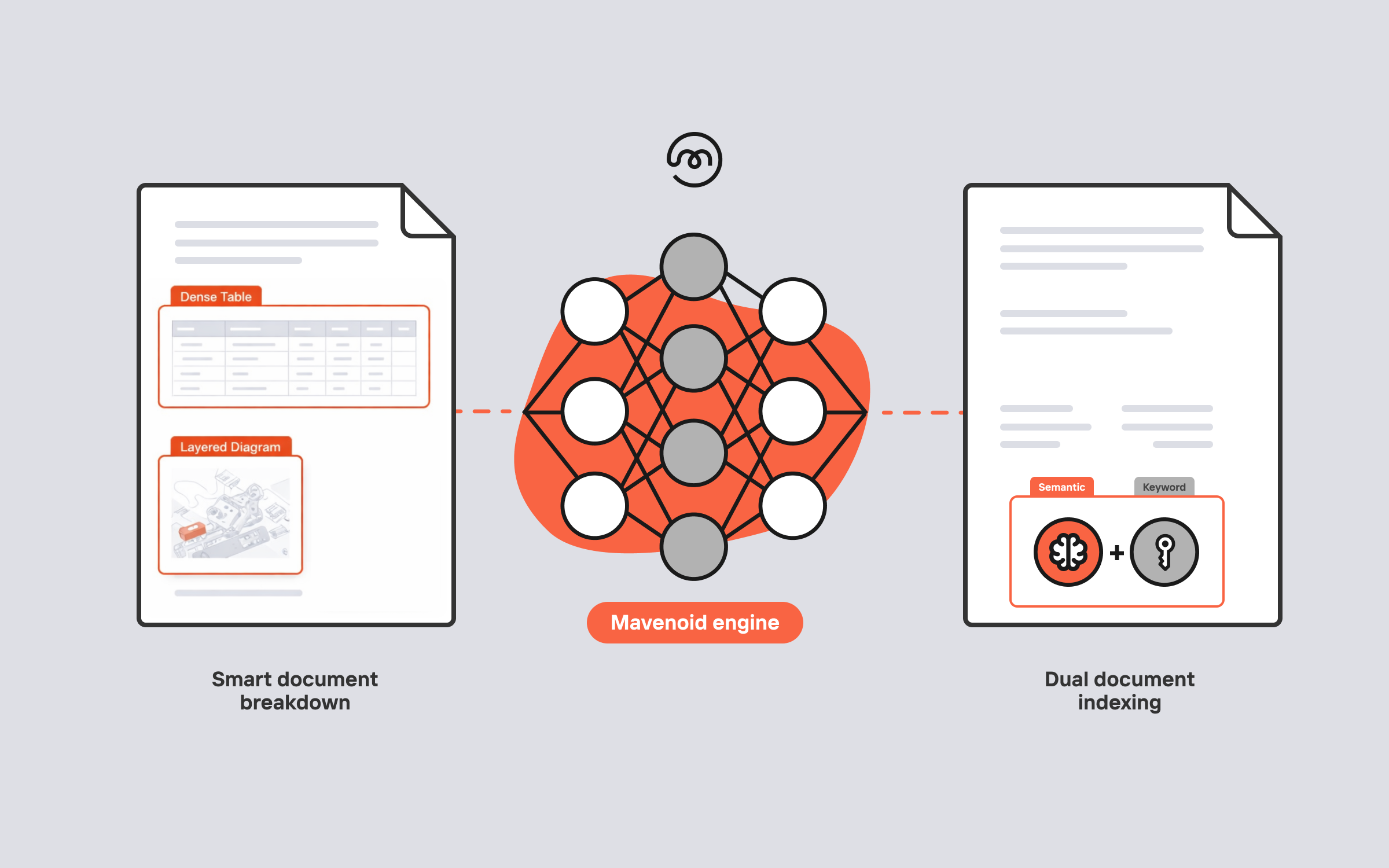

With this framework in mind, we took a look at how GPT-3 could improve our own product and workflows at Mavenoid.

One major challenge in building effective support automation is that it takes time and expertise.

Typically this involves reading lots of manuals, reviewing support tickets, scraping online forums, and organizing that data into errors, symptoms, solutions, and more.

To give you a sense, here’s a comparatively simple support flow for headphones. It took a human a full day of work to compile and organize the data in this flow, which is built to effectively troubleshoot the headphones’ recurring issues. Each of these nodes has paragraphs of text connected to it.

You might have personally worked hundreds of support issues on a given product. You might have thousands of issues in your CRM's records. Sifting through all that history, identifying patterns, recognizing what you should focus on, and deciding how to organize it? That's hard, tedious, and time-consuming. It's not uncommon for that process to take a few weeks for a suite of products.

While sifting through large data sets and finding the trends and relationships is tough for humans, it's simple for computers. Why not let them do that initial grunt work, and let humans simply polish a model up?

That’s why we were so excited about GPT-3. That huge body of internet text that GPT-3 was trained on? It includes discussions of product issues and resolutions happening across the internet. By pairing that with our tools to ingest first-party ticket data, we could save thousands of hours of time and effort.

But remember how we said GPT-3 needed to be “trained” to tackle specific tasks? Getting it to work for product support isn’t so simple. Let’s just say our first few attempts gave us a chuckle.

Without training, GPT-3 often produced outputs that weren’t particularly useful, like this test where it suggested calling a number that doesn’t actually work.

So we got to work and built a prompt optimization framework to help train GPT-3 for support.

With some tweaking, we can leverage GPT-3 to help create product assistants that closely resemble ones created solely by people, and do it in under an hour. The example model below was completely generated by GPT-3. Each node contains auto-generated text.